Thursday, December 24, 2009

ISP Performance Testing Day 3

VDI Interesting Reads

Tuesday, December 22, 2009

Day 2 testing

Monday, December 21, 2009

New Place, new Internet Connection

Saturday, December 12, 2009

Google Chrome OS and the Microsoft EC case re: browser integration

Saturday, December 05, 2009

Syncing your Snow Leopard Mac with Amazon S3

First, a bit of history: I have had an Amazon S3 account for a while now and, as per another blog entry, I've been trying to put it to good use in concert with my MacBook. In the past I tried to get MacFUSE with the s3fs tools to work, but that wasn't so easy, and I haven't found any evidence on the web that anyone has been successful in getting this to work (unless they're not telling, of course).

I did find some people having success with command line tools such as s3sync (a Ruby-based script) and s3cmd, which is also Ruby-based.

The article that got me going on this solution is found here.

What does it do?

Ideally, what I wanted was to mount an S3 bucket as a drive that is available as an icon in the Finder, much like what you get with iDisk when you have a MobileMe account. The solution presented here does not give the same functionality, but it's close.

What this solution does is sync a folder of your choice to an S3 bucket of your choice. It does this with an upload script (a shell script that calls the s3sync tool) that syncs the content of your local folder. This script is run by launchd (configured through a plist file) whenever it sees that a change is made in your folder of choice.

Unfortunately this does not take into account any changes made in nested folders…

At the moment the only way to sync the whole folder is to trigger it by putting a file in your folder of choice, which you can delete again later. Another solution might be to have a script run every 5 minutes or so, depending on whether there is network connectivity. On Linux it could be made more sophisticated with the use of inotifywait. Apparently on Mac OS there is an equivalent going by the name kqueue, but I have yet to find out how to work this into a shell script (if that is even possible).

Until I've found a better way, this is the way I do an automated full recursive folder sync.
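The polling idea mentioned above could look roughly like the sketch below. It is only a sketch under assumptions: the function names tree_fingerprint and sync_if_changed and the state file are made up for illustration, and the fingerprint is simply a checksum over a recursive file listing, so it notices changes in nested folders too.

```shell
#!/bin/bash
# Hypothetical helper: print a fingerprint of everything under a directory.
# The listing includes names, sizes and modification times of nested entries.
tree_fingerprint() {
    find "$1" -exec ls -dln {} + | cksum
}

# Hypothetical helper: run a command only if the tree changed since the last run.
# Usage: sync_if_changed <directory> <state file> <command> [args...]
sync_if_changed() {
    local dir="$1" state_file="$2"
    shift 2
    local current previous=""
    current="$(tree_fingerprint "$dir")"
    [ -f "$state_file" ] && previous="$(cat "$state_file")"
    if [ "$current" != "$previous" ]; then
        # Remember the new fingerprint only when the command succeeded.
        "$@" && printf '%s\n' "$current" > "$state_file"
    fi
}
```

Something like sync_if_changed ~/backup ~/.s3sync.state ~/s3sync/upload.sh could then be run every few minutes, for instance from cron or from a launchd job that uses the StartInterval key. Keep the state file outside the folder being synced, otherwise every run changes the folder it is watching.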

Prerequisites

- An Amazon S3 account, which once created will give you an access ID and a secret key that you need in the programs below. If you don't have one, you can apply for it here.

- A copy of s3sync, get it here and also take the time to read the README file.

Optionally

- s3cmd which you can get here. This command line utility is handy to check your work as you progress.

- Apple's Property List Editor (comes with the Xcode developer tools, which you find on your installation DVD). This comes in handy to create a plist config file for launchd. You can also get a shareware copy of a similar program here.

The optional tools are not required to get this solution going, but they do come in handy when debugging your work and for creating/editing plist files easily (this can also be done using a standard command line texteditor such as nano or pico).

Ok, so how do we put all this stuff together?

First of all, you need to do all this on the command line, which you get by running the Terminal application (found in the Utilities folder inside your Applications folder).

Step 1; Setting up s3sync

First of all you need to download the tool. I have installed the tool in my home directory.

$ wget http://s3.amazonaws.com/ServEdge_pub/s3sync/s3sync.tar.gz

$ tar xvzf s3sync.tar.gz

The 2 commands above will create a directory called s3sync. You can now clean up (remove the archive you downloaded) like so:

$ rm s3sync.tar.gz

The following 2 steps are optional, if you want your up/downloads to be encrypted through SSL. You'll need to download some certificates for SSL to work. First create a directory in your s3sync directory to store the certificates, like so:

$ cd s3sync

$ mkdir certs

$ cd certs

$ wget http://mirbsd.mirsolutions.de/cvs.cgi/~checkout~/src/etc/ssl.certs.shar

Then run the following commands:

$ sh ssl.certs.shar

$ cd ..

These commands install the certs and get you back into the s3sync directory.

Now would be a good time to create the folder you are going to sync. I created one in my home folder that I called "backup".

Also create a bucket on Amazon S3 and in that bucket, create a directory that you'll use as a target for the syncing. Name that directory the same as your folder of choice (of course, no spaces in the folder name).

Next you need to create two shell scripts.

The first can be called upload.sh (or whatever you prefer) with the following content:

#!/bin/bash

# script to upload a local directory up to S3

cd /path/to/yourshellscript/

export AWS_ACCESS_KEY_ID=yourS3accesskey

export AWS_SECRET_ACCESS_KEY=yourS3secretkey

export SSL_CERT_DIR=/your/path/to/s3sync/certs

ruby s3sync.rb -r --ssl --no-md5 --delete ~/backup/ syncbucket:backup

# copy and modify line above for each additional folder to be synced
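Instead of copying the sync line for each additional folder, a loop can do the same thing. This is a rough sketch and not part of s3sync: the function name upload_folders and the SYNC_CMD variable are my own inventions, and it assumes every folder lives directly in your home folder and has a same-named directory in the bucket, as described above.

```shell
#!/bin/bash
# SYNC_CMD is a hypothetical override so the loop can be tried with "echo" first.
SYNC_CMD="${SYNC_CMD:-ruby s3sync.rb}"

# Hypothetical helper: sync several home folders to same-named bucket directories.
# Usage: upload_folders <bucket> <folder> [folder...]
upload_folders() {
    local bucket="$1" folder
    shift
    for folder in "$@"; do
        # Same flags as the single-folder line in upload.sh.
        $SYNC_CMD -r --ssl --no-md5 --delete "$HOME/$folder/" "$bucket:$folder"
    done
}
```

Setting SYNC_CMD=echo before calling upload_folders syncbucket backup photos prints the commands that would be run, which is a cheap way to check the paths before anything gets deleted on either side.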

The second script can be called download.sh and is almost the same as the script you created above, so do a cp upload.sh download.sh and simply change the last line as follows:

ruby s3sync.rb -r --ssl --no-md5 --delete --make-dir syncbucket:backup ~/

The only difference is that the source and destination of the sync have been swapped.

The final thing to do for security (don't want anyone to peek at your Amazon key and secret):

$ chmod 700 upload.sh

$ chmod 700 download.sh

This ensures that only you (your account rather) can read these files.

Step 2; Creating a Launchd job to keep your directory synced

This part of the solution was inspired by a rather old, but still relatively useful posting here that explains the workings of Launchd and whose example is exactly what we need for this solution.

Basically we'll need to create a .plist file that we will place in a folder that may not yet exist:

Your home folder/Library/LaunchAgents

If it doesn't, create it now.

Then we create the .plist file in this directory as follows. You can use nano for instance and name it your.domain.s3sync.plist

<?xml version="1.0" encoding="UTF-8"?>

<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">

<plist version="1.0">

<dict>

<key>Label</key>

<string>your.domain.s3sync</string>

<key>ProgramArguments</key>

<array>

<string>/Users/youraccount/s3sync/upload.sh</string>

</array>

<key>WatchPaths</key>

<array>

<string>/Users/youraccount/backup</string>

</array>

</dict>

</plist>

The last thing you need to do is tell launchd to load this script and go watch that folder of choice. This is done like so:

$ launchctl load ~/Library/LaunchAgents/your.domain.s3sync.plist

And you're all set!
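If you'd rather not type the plist by hand, a small shell function can generate it. This is just a sketch: the function name make_watch_plist is made up, it produces the same three keys as the file above, and it does not escape XML special characters, so it assumes plain paths without &, < or >.

```shell
#!/bin/bash
# Hypothetical helper: print a launchd plist that runs a script when a path changes.
# Usage: make_watch_plist <label> <script path> <watched path>
# Note: arguments are inserted as-is, without XML escaping.
make_watch_plist() {
    local label="$1" script="$2" watch_path="$3"
    cat <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
    <key>Label</key>
    <string>${label}</string>
    <key>ProgramArguments</key>
    <array>
        <string>${script}</string>
    </array>
    <key>WatchPaths</key>
    <array>
        <string>${watch_path}</string>
    </array>
</dict>
</plist>
EOF
}
```

For example, make_watch_plist your.domain.s3sync "$HOME/s3sync/upload.sh" "$HOME/backup" > ~/Library/LaunchAgents/your.domain.s3sync.plist writes the file, after which the launchctl load command shown above picks it up.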

Improvements

- Recursive triggering of the script

- More secure setting of the environment variables (currently contained in the script, which is only readable by your user account)

- …
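For the second improvement, one option is to move the keys out of the scripts into a separate file and refuse to use that file unless it is private. The sketch below is only one way to do it, under assumptions: the function name load_s3_credentials is hypothetical, and the file is assumed to hold plain KEY=value lines.

```shell
#!/bin/bash
# Hypothetical helper: export S3 credentials from a private KEY=value file.
# Usage: load_s3_credentials <credentials file>
load_s3_credentials() {
    local file="$1" perms
    [ -f "$file" ] || { echo "missing credentials file: $file" >&2; return 1; }
    # Characters 5-10 of the ls mode string are the group and other permission bits.
    perms="$(ls -ln "$file" | cut -c5-10)"
    if [ "$perms" != "------" ]; then
        echo "refusing to read $file: run chmod 600 on it first" >&2
        return 1
    fi
    # set -a exports every variable assigned while the file is being sourced.
    set -a
    . "$file"
    set +a
}
```

upload.sh and download.sh could then call load_s3_credentials with the full path to that file instead of carrying the export lines themselves. The keys still sit unencrypted on disk, so this is tidier rather than truly secure.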

Monday, June 29, 2009

Cloud Computing Review

A comparison is made with Microsoft's Azure and Google's App Engine, which in my humble opinion is comparing apples and oranges. As it stands, Amazon's service is more an Infrastructure as a Service, whereas Microsoft's and Google's respective offerings are more Platforms as a Service. They provide an environment in which you can start coding immediately and not have to deal with the mechanics of individual server instances that have to be prepped with the dev platform of your choosing (the Amazon approach). Yes, you don't have to start completely from scratch; you can build on someone else's image, if it suits your way of working...

Also the article, as far as I've been able to establish, contains an inaccuracy. It states that you can choose machine images with Windows 2008 Server, however there are currently no Windows 2008 images available on Amazon.

Monday, June 08, 2009

Amazon S3 Cloud Storage on Leopard 10.5.7; a European War Tale

Today I decided I wanted a better, more intuitive way to interact with S3 starting with my Mac (which I use most often). There were a number of items needed:

1. MacFUSE; a Filesystem in Userspace (FUSE) implementation for Mac OS X

2. S3FS from Google Code, which needs to be compiled.

The whole process is described here, so I won't repeat it.

Once you have installed MacFUSE and compiled the s3fs program, you are ready to mount your own S3 bucket and start filling it up with either backups (rsync'ing it for instance) or just as an extra HD. You could even mount multiple buckets.

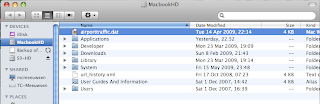

The picture below shows what it looks like.

One point of note; I was unsuccessful trying to mount buckets located in Europe. It gave me a 301 error and it is unclear why. I saw a discussion in the Amazon AWS dev forum, but there was no answer from Amazon even...

So for now, use only US buckets and this should work.

UPDATE:

It turns out it doesn't work... Even though the S3 bucket gets mounted, it doesn't accept any files, nor can we create directories. So far I've been unable to determine the cause of this problem. It seems that there is a paid version of s3fs, but the pricing ($129) seems disproportionate to its utility... The forums for s3fs also seem void of clear guidance on what the problem could be. So far I've been able to determine that there is an issue with the requests to Amazon S3 that this program generates. What I don't understand is why more people haven't complained about this, so I'm guessing that there may be a version issue with the underlying libraries used by s3fs that only I (and 2 others) seem to be experiencing...

Wednesday, May 06, 2009

Bandwidth Testing part 2

And below are the results from Speedtest.net, which by the way makes use of the same software but a different server infrastructure, I'm sure. The interesting thing is that their results are actually better than those measured solely on Telenet's network (although only marginally so).

The above results are measured on an Asus EEE PC 900 (with built-in wifi of the 802.11b/g denomination).

The results below are measured on a MacBook running Mac OS X 10.5.6 with a built-in AirPort Extreme of the 802.11n (draft) denomination...

And again using the ISP's meter:

OK, so clearly the Asus EEE PC 900's wifi is the bottleneck in these tests, and the MacBook is able to use bandwidth all the way up to the ISP's limits...

I wonder if my abhorrent download results earlier were a function of limits at the server I was downloading from or if there was something else limiting going on... hard to troubleshoot these transient type issues.

Anyhow, back to report these results to the support folks @ my ISP....

To be continued....

Tuesday, May 05, 2009

Bandwidth Testing

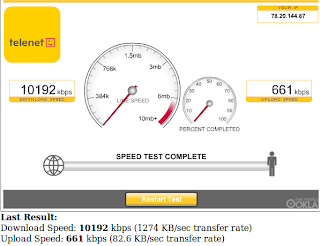

I have a so-called Turbonet subscription which entitles me (according to the latest offer advertised on Telenet's website) to 25Mbps download speed. It was originally sold to me as 20Mbps, though.

The main thing I've noticed is that download speeds have become erratic and slow at times.

It so happens that a little while ago I started measuring my download speeds, and at first I got this:

The download seems fairly close to what is to be expected, the upload speed tho seems only half of what is to be expected.

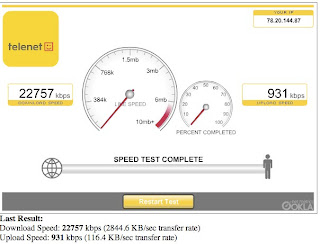

The second measurement (which was actually earlier this evening, on a Windows 7 machine):

This shows less than half of what it's supposed to be...

I then later did a second test from my Ubuntu Netbook and got the following result:

Very similar to the results earlier from the Windows machine so I'm ruling out any computer related problems.

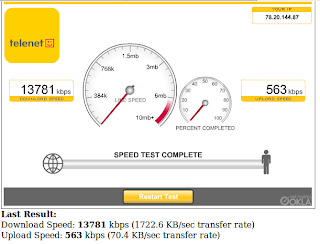

Finally the result from my ISP's bandwidth meter:

So there my upload speed dropped again; otherwise it's close to the other 2 results of this evening.

Now I'm supposed to reset my router, which I will do tomorrow. This reset is supposed to load the router with its latest firmware according to my ISP.

I'll retest tomorrow, to be continued....

Wednesday, April 01, 2009

Advances in Virtualization

An interesting discussion by Scott Lowe about some of the technologies involved can be found here. It is indeed more profound than Cisco simply entering the blade server market.

It's essentially the birth of a next-gen virtualization eco-system that takes virtualization to the next level. Of course we're only just standing at the virtual cradle of this new baby, but given how the various developments are coalescing, it's quite clear that this may in fact be a new wave of virtualization, bringing the infrastructure for compute, storage and network to new optimal performance levels, while promising to ease the burden of management. Now we'll see how the traditional server vendors react...

-- Post From My iPhone

Wednesday, March 04, 2009

Next Generation Virtualization Management

- efficiency

- speed of change

and opposite

- control

- manageability

Virtualization and automation help.

Virtualization both introduces new elements to be managed as well as

The overall solution should be SLA driven:

- availability

- security

- performance

It should be utility like.

It consists of the VDC-OS and the management layer.

The VDC-OS already has built-in management features like VMotion and DRS that reduce the amount of management humans have to do.

vCenter becomes the centralized management platform of the virtual datacenter. Aside from operational management tasks, it also needs to provide the means to track how we're doing against SLA.

On the other hand the user becomes enabled.

Lab manager will serve as the means for this in the future.

Chargeback will also be provided, supporting various models.

Even for those not ready to cross charge internally it may serve as a way to create awareness of the cost of usage of resources.

Life Cycle manager is key to automate provisioning and tracking changes throughout a VM's life right through to decommissioning.

This product tracks configs of servers.

For capacity management there will be Capacity IQ

It can predict when you'll run out of capacity and it identifies overprovisioned VM's.

In order to scale out you can use vCenter linked mode, in case you run out of the max number of supported objects a single vCenter can manage.

Orchestrator allows you to apply actions across a large number of machines automatically using a workflow process, for instance to apply a change across a large number of VM's.

With AppSpeed we can guarantee SLA's at the app level, do assured migrations and do root cause analysis for performance issues.

VMware aims to work with partners to integrate with the big 4 management platforms.

There are integrations, examples

- BMC Remedy and Lifecycle Manager

- CA data center manager (for apps) and stage manager

PSO have operational readiness assessments to help achieve the above.

Tuesday, March 03, 2009

The Financial Meltdown; here's why

Saturday, February 28, 2009

SQL performance

A reduction in storage spindles is one source of performance problems. Queues can also be a problem.

Sequential reads drop when there are more hosts added to your vmfs volume.

One way to fix this is to set memory reservations.

One pitfall is someone using the hosted product and forming a misconception about ESX from it.

Newer procs are always better. Decrease in pipeline lengths and increase in caches.

Dell's DVD store is a great benchmarking tool.

Servers that had multiple SQL instances are best broken down in multiple VM's.

When doing PoC's ensure you use target HW for correct modelling.

There is a white paper on VMware.com about this topic.

CapacityIQ

The problem this tool tries to address: basically, we tend to overprovision because we don't have the right tools to predict better.

Virtualization obviously helps in this area.

There are multiple considerations in virtuality

- resource dependencies

- workload mobility

- Cpu and memory optimizations (memory ballooning)

- storage optimizations (thin provisioning, linked clones)

The key is that the environment is dynamic.

Again SLA's are key and need to be guarded.

The architecture:

Do what if scenarios. Capacity dashboard

You can set alert triggers, e.g. send me an email when capacity reaches 80%.

Example of a whatif report

The focus is on intelligent planning using trending, and it gives you tools to optimize your current environment, for instance by identifying idle VM's.

How the products work together.

Virtualization Optimization: Capacity Mgmt best practices (SAS Institute)

Some of their findings:

- Memory is more important than CPU

- Memory tracking is important

They started very conservatively (as most do when new to virtualization), with a ratio of 11:1. They then used the SAS tool to track performance and behaviors, and from the statistics were able to gather that they weren't using their virtual infrastructure optimally. They then determined that they could increase their consolidation ratio from 11:1 to 20:1.
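To get a feel for what that change in ratio means in hosts, here is a bit of back-of-the-envelope arithmetic as a shell sketch. The function name hosts_needed and the figure of 220 VM's are my own made-up example; the session did not give actual VM counts.

```shell
#!/bin/bash
# Hypothetical helper: number of hosts needed for a VM count at a given
# consolidation ratio (VM's per host), rounded up to whole hosts.
# Usage: hosts_needed <number of VMs> <VMs per host>
hosts_needed() {
    local vms="$1" ratio="$2"
    echo $(( (vms + ratio - 1) / ratio ))
}

# Example with an assumed 220 VM's:
# hosts_needed 220 11   # 11:1 ratio
# hosts_needed 220 20   # 20:1 ratio
```

With the assumed 220 VM's this gives 20 hosts at 11:1 and 11 hosts at 20:1, so the higher ratio would cut the host count nearly in half.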

The tool name is: SAS IT intelligence for VMware VI.

Along the way they also discovered that the CPU ready measurement is an important measurement to track.